Product Design | B2B SaaS | Q4 2018 - Q3 2020

Design for an AI-powered feature that scaled an enterprise platform, helping professionals analyze and extract insights from large document sets in high-pressure workflows.

Before LLMs became commonplace, AI software was already being used in legal technology for document review. In mergers and acquisitions (M&A), lawyers need to comb through large volumes of contracts and other documents to find critical risk factors. This process is like finding a needle in a haystack, and lawyers would rather spend their time on deeper analysis of potential risks.

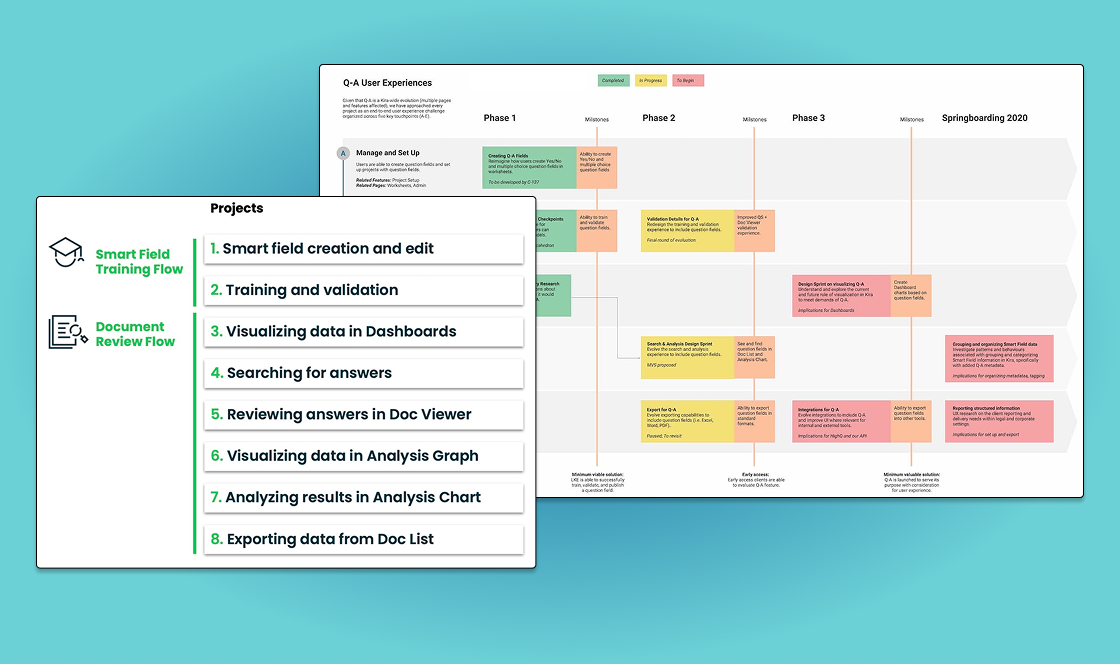

I worked with a cross-functional team that included a product manager, a UX researcher, a quality assurance engineer, and software engineers. At the beginning, I collaborated closely with the product manager to define the value proposition of the feature and to break the large scope down into eight sub-projects that were manageable to deliver. Each sub-project corresponded to an area of the application that needed to incorporate the “answers” feature, such as model training, document review, and export. Each sub-project went through an end-to-end design process, from user research through to development.

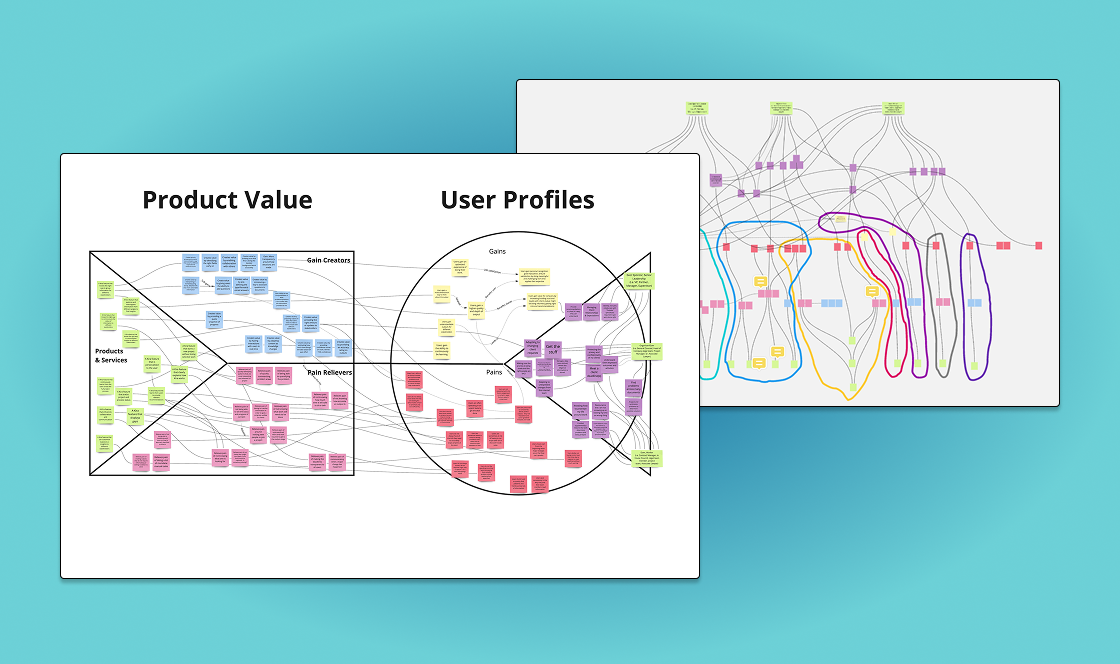

Defining value proposition

Planning and early discovery

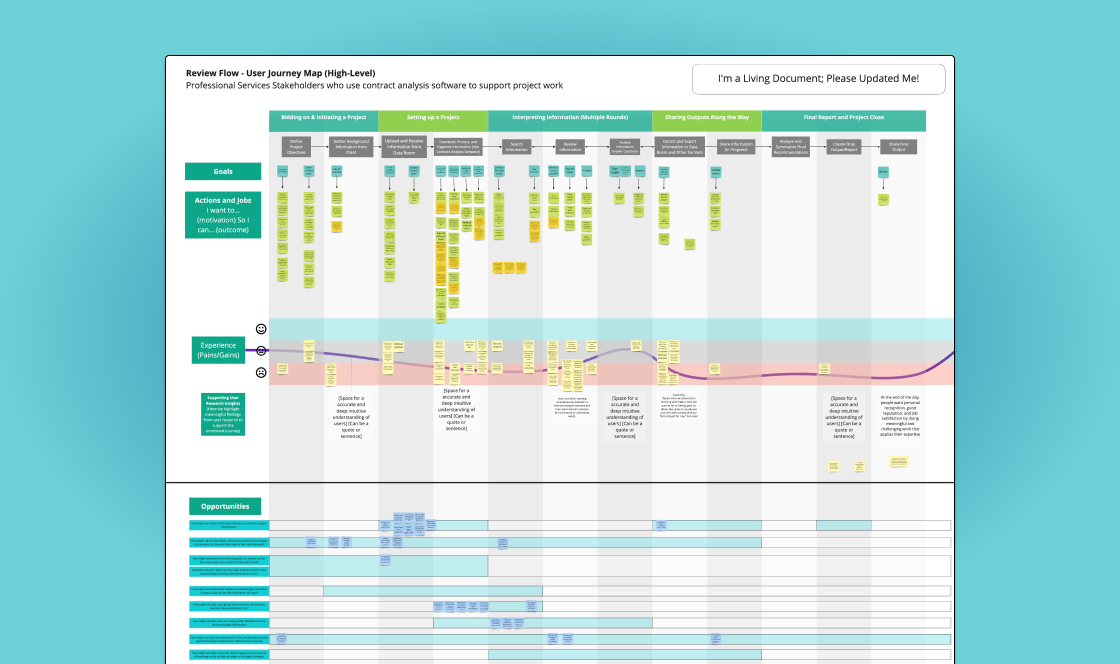

High-level journey for bigger-picture context

Sub-flows for each project

Example of sandbox design exploration

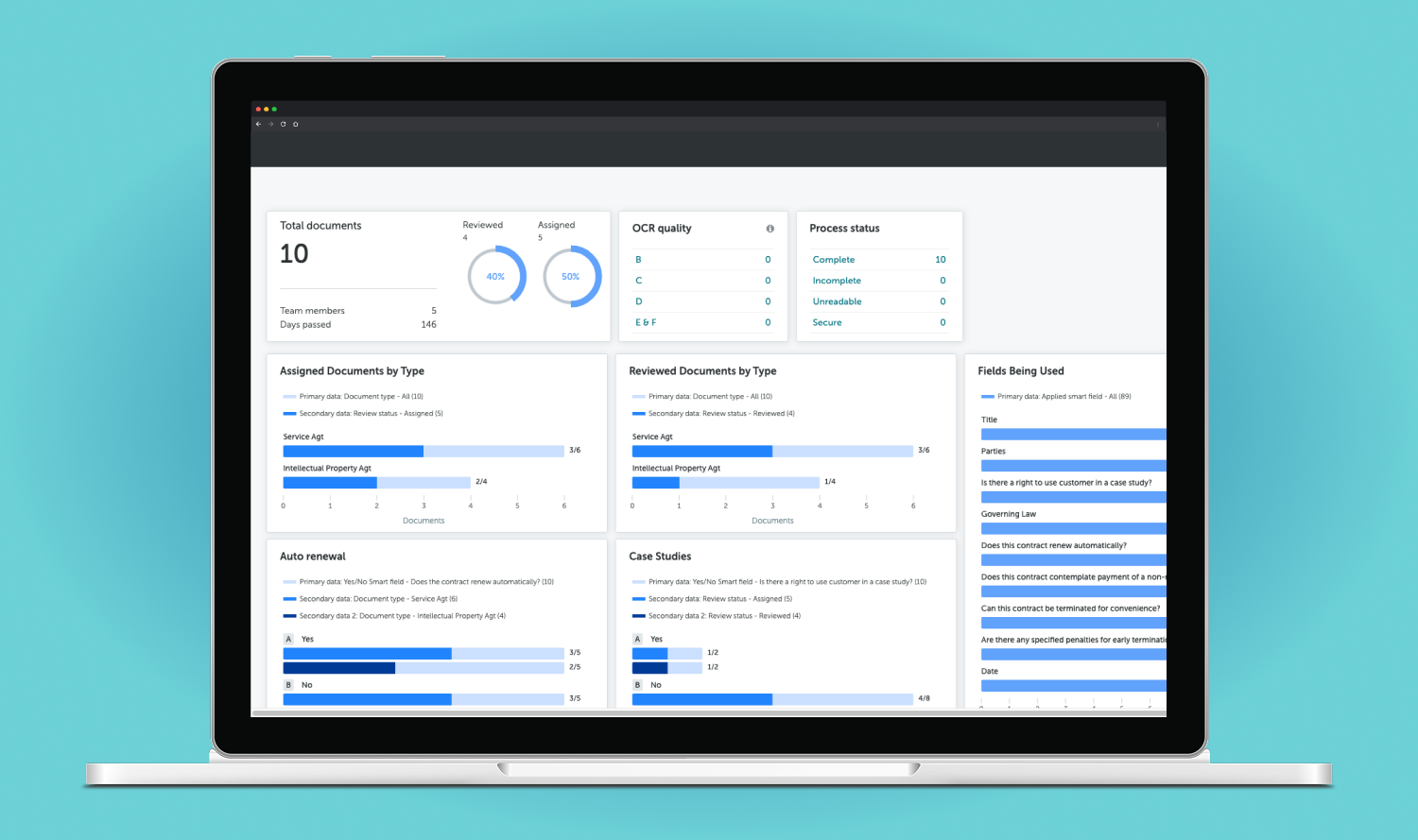

Walkthrough of high-fidelity designs

Once all of the sub-projects were built and tested, our team collaborated with internal customer success and sales teams on a go-to-market strategy. I took part in an early access program to gather feedback and address some of the initial feature barriers. This was an industry-leading feature at the time, and the product has since evolved to incorporate additional AI-generated summary capabilities.

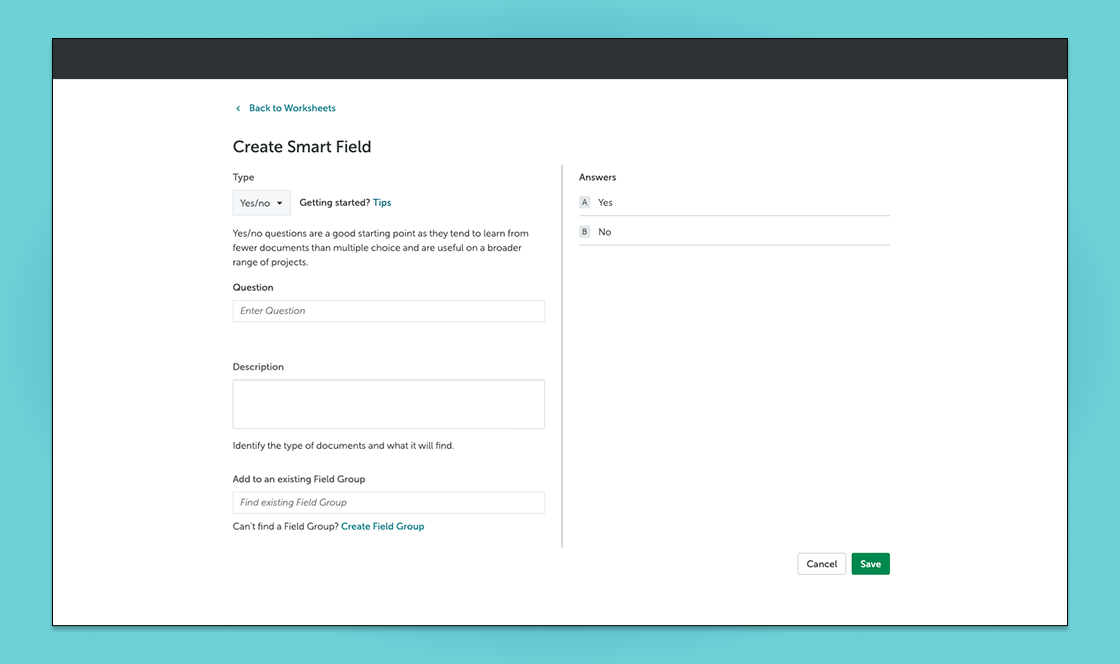

Step 1: Create Answer ML model

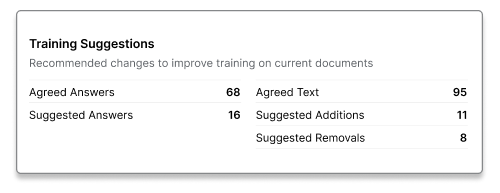

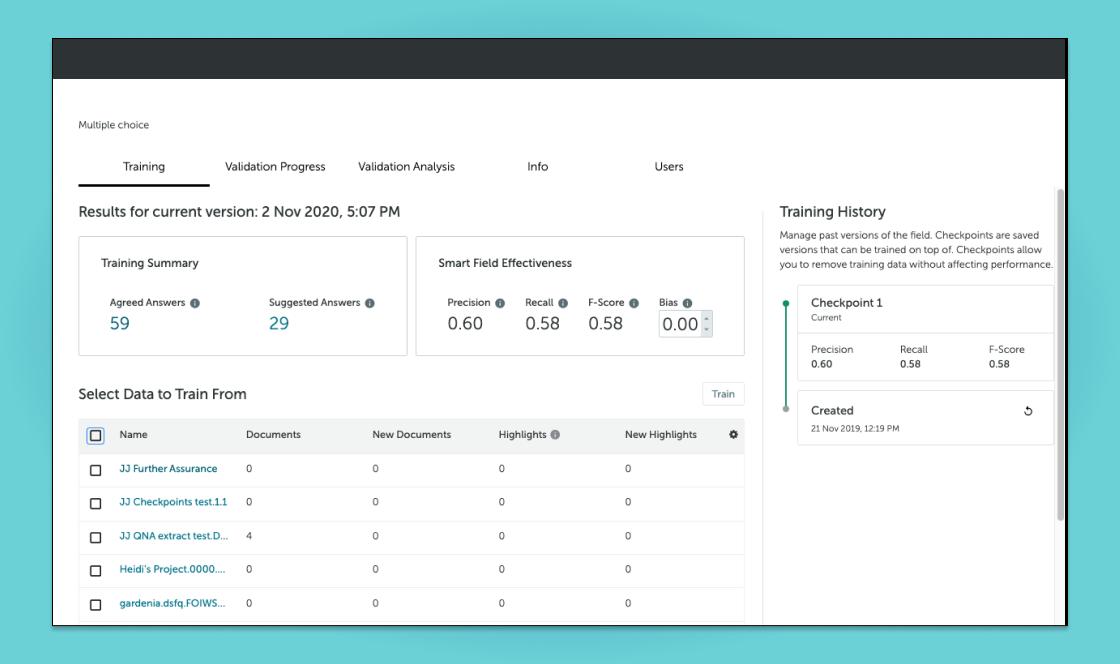

Step 2: Train Answer model

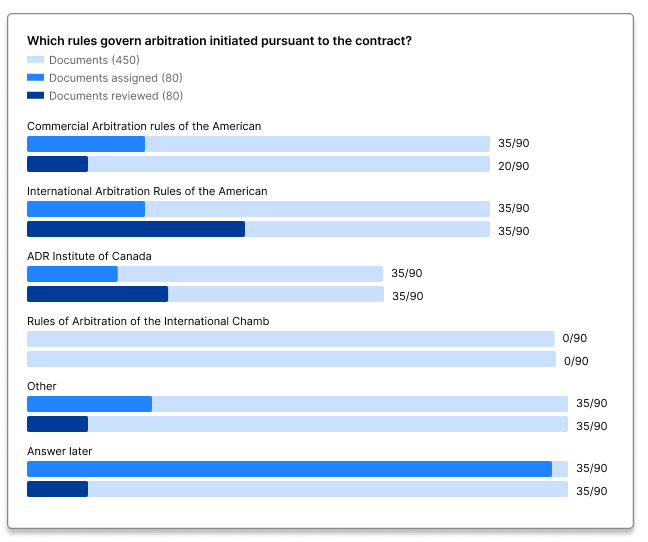

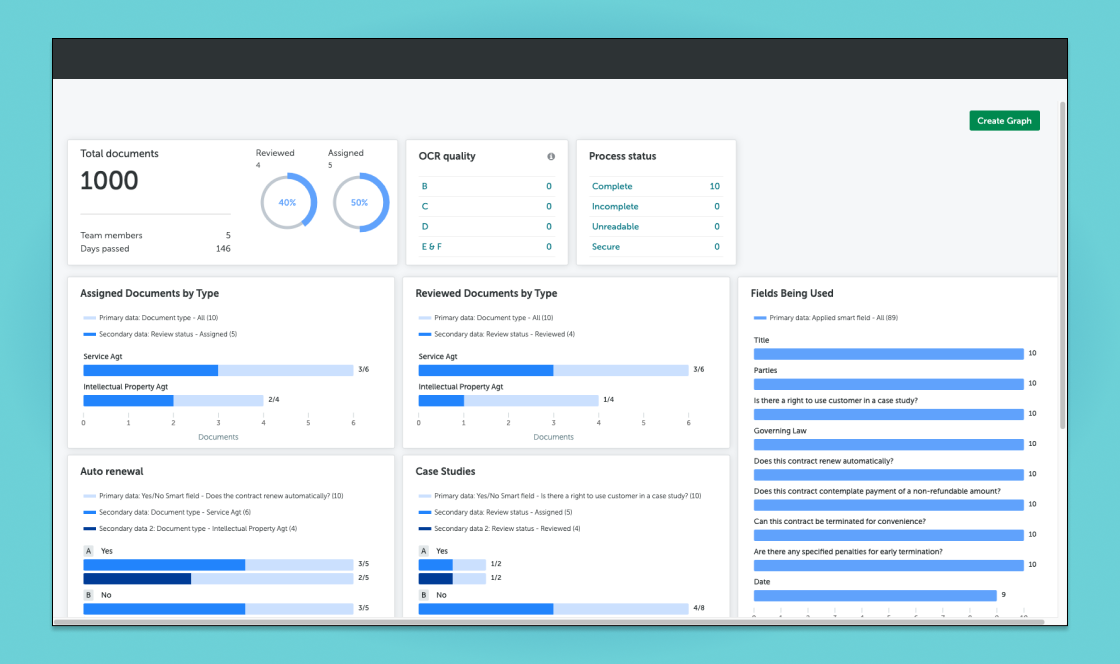

Step 3: Apply Answer model and view summary

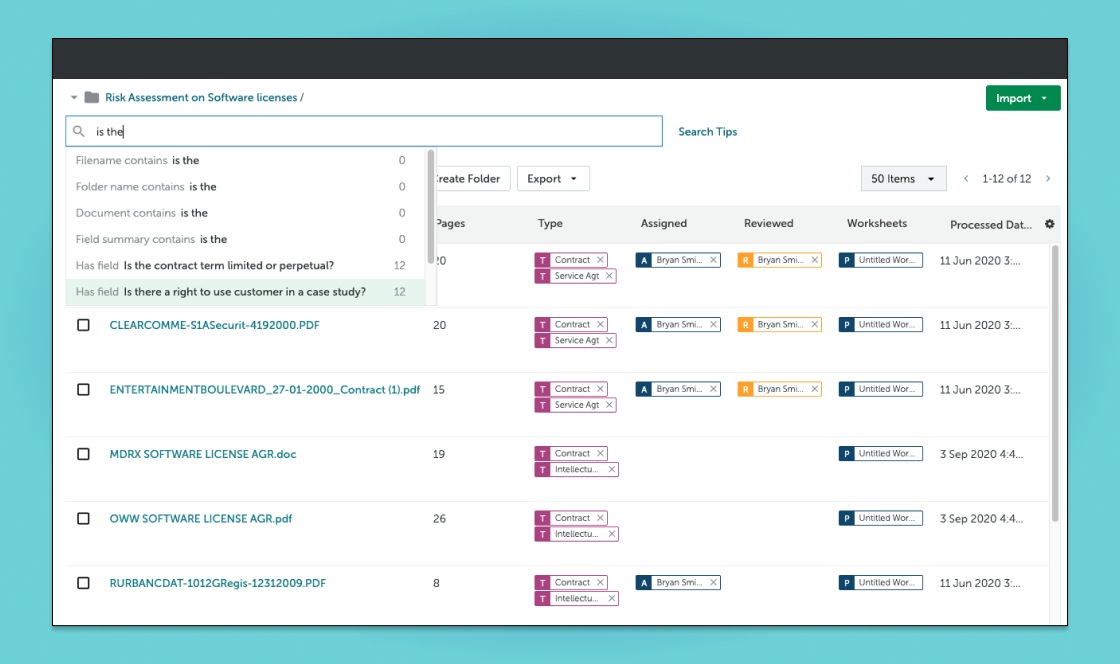

Step 4: Search for document results

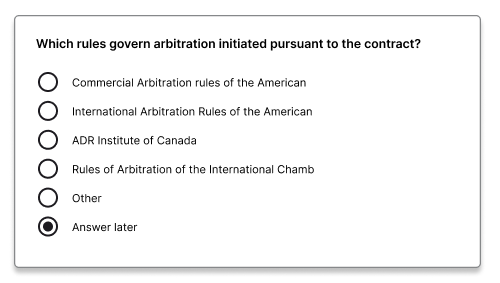

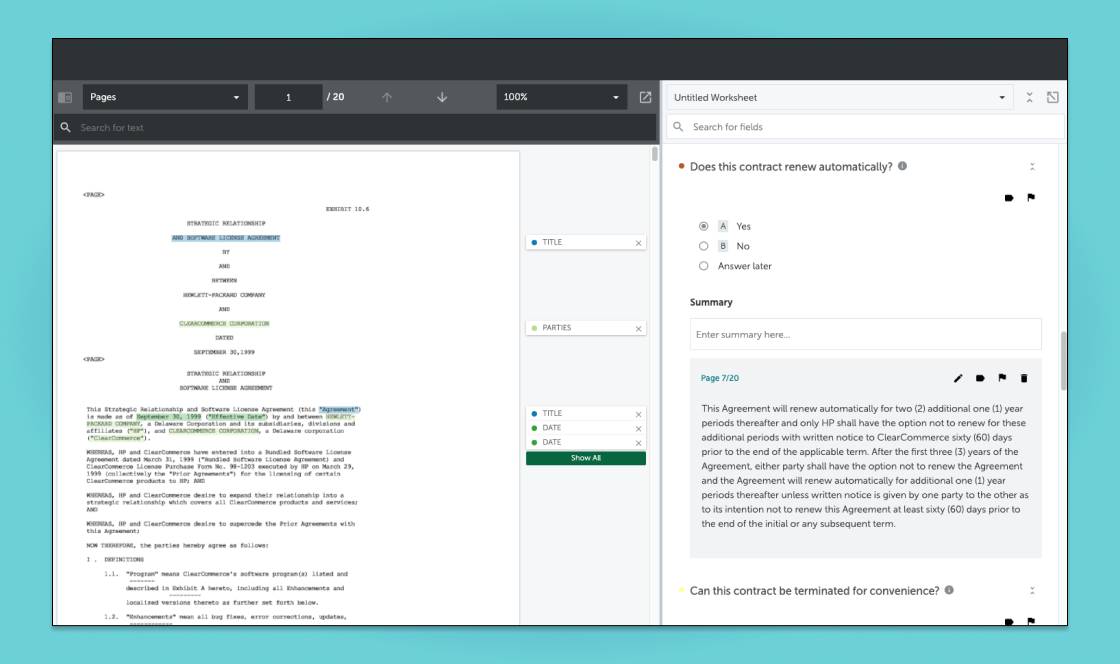

Step 5: Review results to validate answers

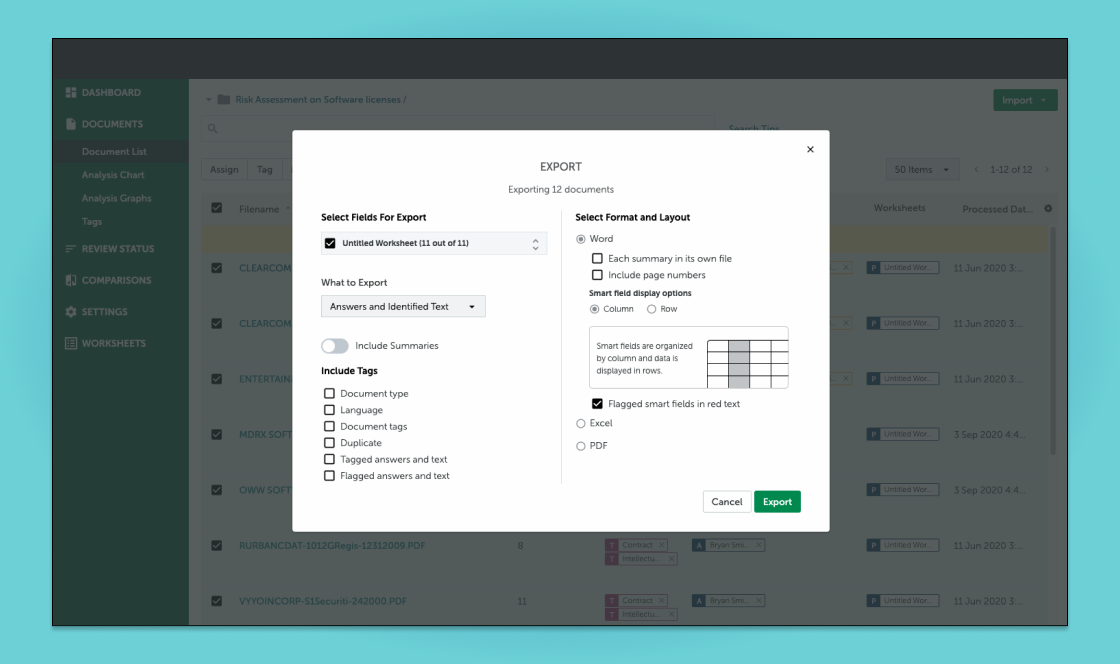

Step 6: Export answers in different formats